Agentic Coding in the World

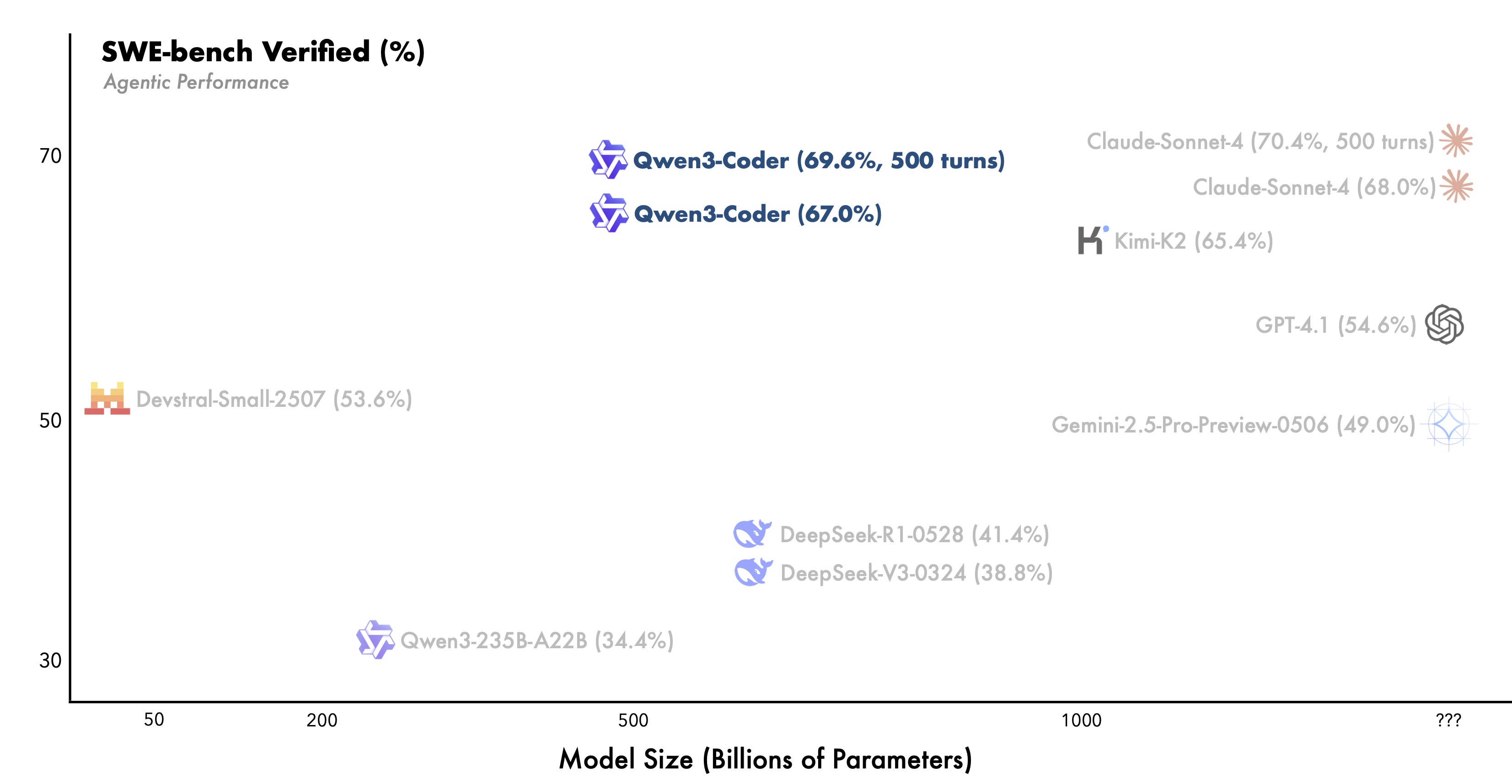

Qwen3 Coder is a 480B-parameter Mixture-of-Experts model with 35B active parameters. It supports a native 256K-token context, expandable to 1M tokens, and delivers state-of-the-art performance comparable to Claude Sonnet 4.

The Most Agentic Code Model

Breakthrough Performance in Real-World Software Engineering

Qwen3 Coder represents a new paradigm in AI-assisted development. With massive scale and specialized agentic capabilities, it excels at complex, multi-step coding tasks that require planning, tool use, and iterative problem-solving.

Massive Scale

480B-parameter Mixture-of-Experts architecture that activates only 35B parameters per token, pairing large model capacity with efficient inference

Extended Context

Native 256K-token context, expandable to 1M tokens for comprehensive repository-level understanding

Agentic Intelligence

Advanced reasoning for multi-turn interactions, planning, tool usage, and complex software engineering tasks

State-of-the-Art

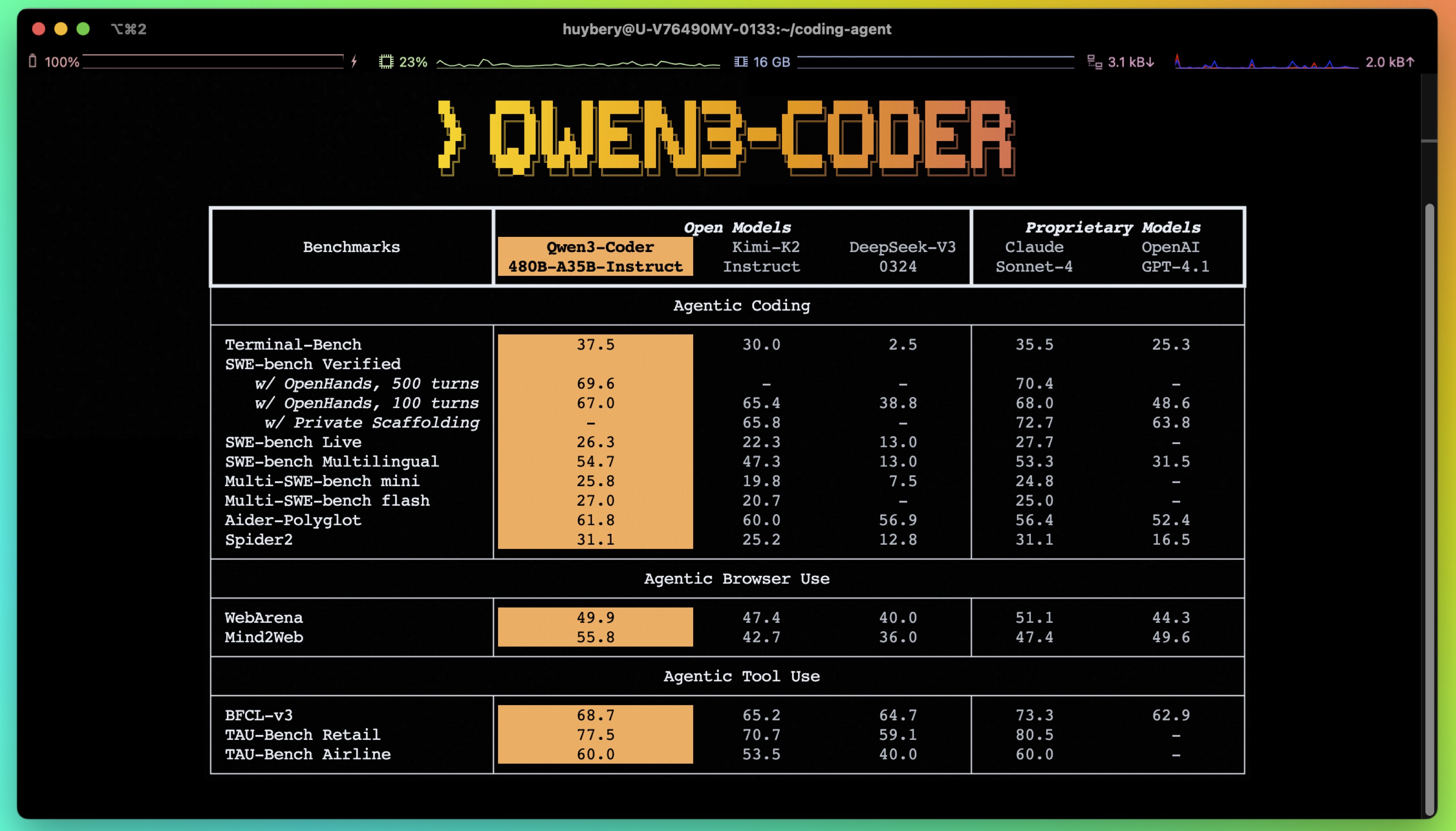

Comparable to Claude Sonnet 4 on Agentic Coding, Browser-Use, and Tool-Use benchmarks

Advanced Training Methods

Hard to Solve, Easy to Verify

Scaling Pre-Training

- 7.5T training tokens, 70% of them code

- Native 256K context support

- YaRN extension up to 1M tokens

- Optimized for repo-scale data

Code RL at Scale

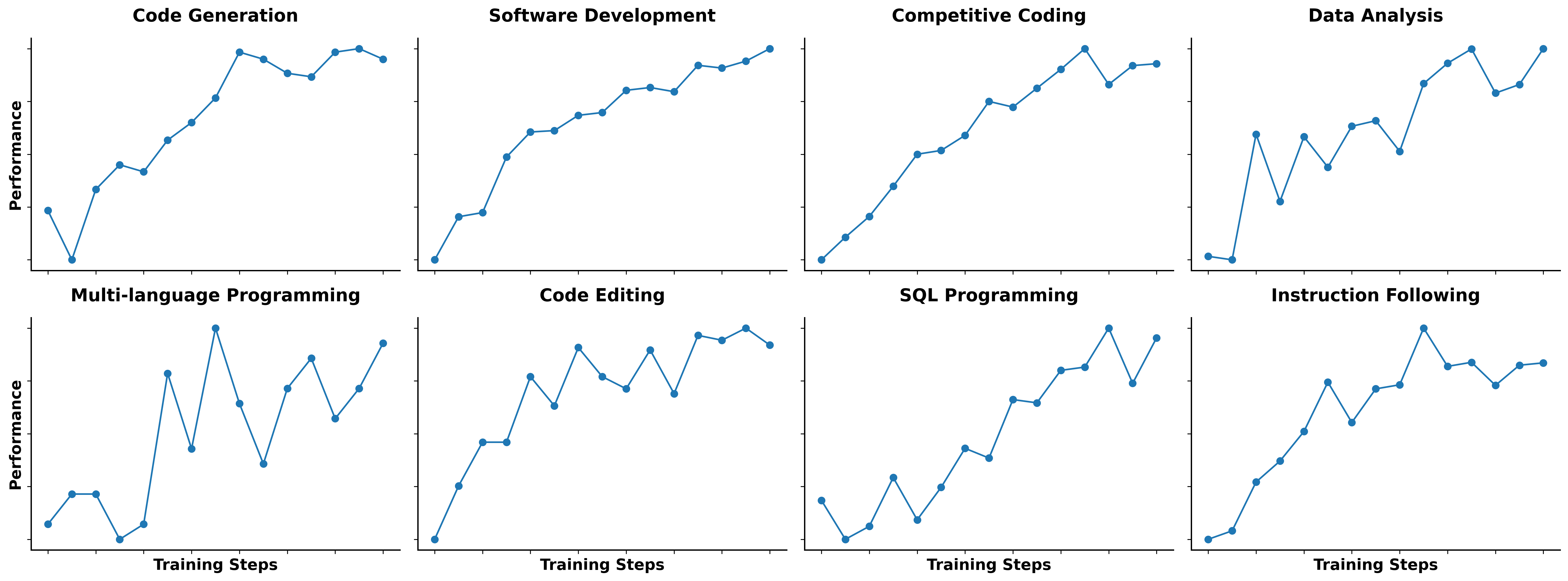

Large-scale reinforcement learning on diverse real-world coding tasks with automatic test case generation

- Significantly boosted execution success rates

- Improved performance across all coding tasks

- Real-world problem-solving capabilities

Long-Horizon Agent RL

Multi-turn interaction training with 20,000 parallel environments on Alibaba Cloud infrastructure

- State-of-the-art SWE-Bench Verified performance

- Advanced planning and tool usage

- Real-world software engineering tasks

Scaling Code RL: Hard to Solve, Easy to Verify

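Unlike the prevailing focus on competition-level code generation, we believe all code tasks are naturally well suited to execution-driven, large-scale reinforcement learning: a correct solution may be hard to produce, but running it against test cases makes it easy to verify.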

- Automatically scaling up test cases for diverse coding tasks

- Creating high-quality training instances

- Unlocking the full potential of reinforcement learning
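The "hard to solve, easy to verify" idea can be sketched as an execution-based reward. The Python below is a minimal illustration under stated assumptions, not Qwen's training pipeline: `generate_tests` and `execution_reward` are hypothetical helpers, test cases are produced by running a trusted reference solution on random inputs, and the reward is simply the fraction of tests a candidate program passes.

```python
import random
from typing import Any, Callable

Case = tuple[tuple, Any]  # (positional args, expected result)


def generate_tests(reference: Callable[..., Any],
                   sample_input: Callable[[random.Random], tuple],
                   n: int, seed: int = 0) -> list[Case]:
    """Create n test cases by running a trusted reference solution on random inputs."""
    rng = random.Random(seed)
    tests = []
    for _ in range(n):
        args = sample_input(rng)
        tests.append((args, reference(*args)))
    return tests


def execution_reward(candidate_src: str, entry_point: str, tests: list[Case]) -> float:
    """Fraction of test cases the candidate passes, in [0.0, 1.0]."""
    namespace: dict[str, Any] = {}
    try:
        exec(candidate_src, namespace)  # a real pipeline would sandbox untrusted code
        fn = namespace[entry_point]
    except Exception:
        return 0.0  # syntax errors or a missing entry point earn no reward
    passed = 0
    for args, expected in tests:
        try:
            if fn(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on one input counts as a failed test
    return passed / len(tests) if tests else 0.0


def random_int_list(rng: random.Random) -> tuple:
    """Hypothetical input sampler: one list of 0 to 10 small integers."""
    return ([rng.randint(-50, 50) for _ in range(rng.randint(0, 10))],)


tests = generate_tests(sorted, random_int_list, n=100)
print(execution_reward("def solve(xs):\n    return sorted(xs)\n", "solve", tests))  # 1.0
print(execution_reward("def solve(xs):\n    return xs\n", "solve", tests))  # below 1.0
```

Because the reward comes from running code rather than from human labels, it scales with compute; a production system would execute candidates in an isolated sandbox with time and memory limits instead of calling `exec` in-process.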

Scaling Long-Horizon RL

In real-world software engineering tasks like SWE-Bench, Qwen3-Coder must engage in multi-turn interaction with the environment, involving planning, using tools, receiving feedback, and making decisions.

- 20,000 independent environments in parallel

- Leveraging Alibaba Cloud's infrastructure

- State-of-the-art SWE-Bench Verified performance
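A long-horizon rollout is a loop of acting, observing feedback, and updating the plan, repeated across many independent environments at once. The sketch below assumes a hypothetical toy environment (`GuessEnv`, which hides a number and answers "higher" or "lower") and a hard-coded binary-search policy standing in for the model. It runs 64 episodes concurrently with `asyncio.gather` rather than 20,000, and it only collects trajectories and rewards; the policy update itself is left out.

```python
import asyncio
import random
from dataclasses import dataclass, field


@dataclass
class GuessEnv:
    """Hypothetical toy environment: find a hidden integer in [lo, hi] from feedback."""
    target: int
    lo: int = 0
    hi: int = 1000

    async def step(self, action: int) -> str:
        await asyncio.sleep(0)  # stand-in for tool execution or I/O latency
        if action == self.target:
            return "solved"
        return "higher" if action < self.target else "lower"


@dataclass
class Trajectory:
    turns: list[tuple[int, str]] = field(default_factory=list)  # (action, observation)
    reward: float = 0.0


async def rollout(env: GuessEnv, max_turns: int) -> Trajectory:
    """One multi-turn episode: act, observe feedback, update the plan, repeat."""
    traj = Trajectory()
    lo, hi = env.lo, env.hi  # the policy's current belief about where the answer is
    for _ in range(max_turns):
        action = (lo + hi) // 2  # stand-in policy; a real agent would query the model
        obs = await env.step(action)
        traj.turns.append((action, obs))
        if obs == "solved":
            traj.reward = 1.0  # sparse, verifiable reward at the end of the episode
            break
        if obs == "higher":
            lo = action + 1
        else:
            hi = action - 1
    return traj


async def collect(num_envs: int, max_turns: int = 20, seed: int = 0) -> list[Trajectory]:
    """Run num_envs independent episodes concurrently."""
    rng = random.Random(seed)
    envs = [GuessEnv(target=rng.randint(0, 1000)) for _ in range(num_envs)]
    return list(await asyncio.gather(*(rollout(env, max_turns) for env in envs)))


trajs = asyncio.run(collect(num_envs=64))
print(sum(t.reward for t in trajs) / len(trajs))  # 1.0
```

With a 20-turn budget every episode finishes in at most 10 turns; cutting `max_turns` to 3 makes most episodes fail, which is exactly the kind of outcome difference a long-horizon RL objective has to learn from.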

Seamless Developer Integration

Works with Your Favorite Tools

Qwen Code CLI

A research-oriented CLI tool adapted from Gemini CLI, with an enhanced parser and tool support for Qwen3-Coder

Claude Code Compatible

Use Qwen3-Coder with Claude Code through a proxy API or a custom router configuration

Cline Integration

Configure Qwen3-Coder as an OpenAI Compatible provider with a custom base URL

Direct API Access

RESTful API access through Alibaba Cloud Model Studio
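Because the API is OpenAI-compatible, a request is a plain HTTPS POST to `/chat/completions`. The standard-library sketch below assumes the international Model Studio compatible-mode base URL, the model name `qwen3-coder-plus`, and an API key stored in the `DASHSCOPE_API_KEY` environment variable; treat all three as assumptions and confirm them in the Model Studio console. The same base URL, key, and model name are what an OpenAI Compatible provider in a tool such as Cline would be configured with.

```python
import json
import os
import urllib.request

# Assumptions: confirm the endpoint and model name in Alibaba Cloud Model Studio.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3-coder-plus"


def build_chat_request(prompt: str, api_key: str, base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style POST /chat/completions request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


api_key = os.environ.get("DASHSCOPE_API_KEY")  # assumed variable name
if api_key:  # only touch the network when a key is configured
    print(send(build_chat_request("Write a Python function that reverses a string.", api_key)))
```

Keeping request construction separate from sending makes it easy to inspect or log the exact payload before any network call is made.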

Real-World Applications

From Code Generation to Complex Software Engineering

Repository Analysis

Understand entire codebases with a native 256K-token context window, expandable to 1M tokens
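To judge what actually fits in the context window, a rough token budget helps. The sketch below is illustrative only: it estimates tokens with a crude 4-characters-per-token heuristic (not Qwen's tokenizer), reserves room for the model's reply, and greedily packs the smallest files first. `read_repo`, `pack_files`, the reserve size, and the file extensions are hypothetical choices.

```python
import os

CHARS_PER_TOKEN = 4  # crude heuristic; real counts depend on the model's tokenizer


def estimate_tokens(text: str) -> int:
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN  # round up


def read_repo(root: str, exts: tuple[str, ...] = (".py", ".md", ".toml")) -> dict[str, str]:
    """Map relative path -> file text for files with the given extensions."""
    files = {}
    for dirpath, _dirs, names in os.walk(root):
        for name in names:
            if name.endswith(exts):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    files[os.path.relpath(path, root)] = f.read()
    return files


def pack_files(files: dict[str, str], budget_tokens: int,
               reserve_tokens: int = 8_192) -> tuple[list[str], list[str], int]:
    """Greedily include the smallest files first, keeping reserve_tokens free for the reply."""
    available = budget_tokens - reserve_tokens
    included, skipped, used = [], [], 0
    for path, text in sorted(files.items(), key=lambda kv: len(kv[1])):
        cost = estimate_tokens(f"### {path}\n{text}\n")  # header marks each file's boundary
        if used + cost <= available:
            included.append(path)
            used += cost
        else:
            skipped.append(path)
    return included, skipped, used
```

For example, `pack_files(read_repo("."), budget_tokens=262_144)` targets the native window, while `budget_tokens=1_048_576` approximates the extended one. Smallest-first packing maximizes the number of files included; other orderings (for example, by relevance to the task) trade coverage for focus.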

Multi-Step Debugging

Autonomous problem-solving with iterative debugging and testing

Architecture Planning

System design and technical decision-making for complex projects

Code Refactoring

Large-scale refactoring with dependency analysis and safety checks

Frequently Asked Questions

What makes Qwen3 Coder 'agentic'?

Qwen3 Coder can engage in multi-turn interactions, plan complex tasks, use tools autonomously, and solve problems iteratively. This goes well beyond simple code completion: it acts as an intelligent coding agent.
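Multi-turn tool use maps onto the OpenAI-style function-calling message format: the model replies with `tool_calls`, the client runs them, and the results go back as `role: "tool"` messages until the model answers in plain text. This is a minimal sketch; `read_file`, `run_tests`, `agent_loop`, and `fake_model` are hypothetical, and `call_model` stands in for whatever client actually sends the conversation to Qwen3 Coder.

```python
import json
from typing import Callable


# Hypothetical local tools; a real agent would expose file, shell, and test tools.
def read_file(path: str) -> str:
    with open(path, encoding="utf-8") as f:
        return f.read()


def run_tests(pattern: str = "test_*.py") -> str:
    return f"would run tests matching {pattern}"  # placeholder instead of a real runner


TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file, "run_tests": run_tests}


def execute_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Run OpenAI-style tool_calls and return one role="tool" message per call."""
    results = []
    for call in tool_calls:
        fn = call["function"]
        try:
            args = json.loads(fn.get("arguments") or "{}")  # arguments arrive as a JSON string
            output = TOOLS[fn["name"]](**args)
        except Exception as exc:
            output = f"error: {type(exc).__name__}: {exc}"  # feed errors back to the model
        results.append({"role": "tool", "tool_call_id": call["id"], "content": output})
    return results


def agent_loop(call_model: Callable[[list[dict]], dict], messages: list[dict],
               max_turns: int = 10) -> str:
    """Alternate model turns and tool execution until the model replies without tool calls."""
    for _ in range(max_turns):
        reply = call_model(messages)
        messages.append(reply)
        if not reply.get("tool_calls"):
            return reply.get("content") or ""
        messages.extend(execute_tool_calls(reply["tool_calls"]))
    return "stopped: turn limit reached"


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for a real model call: request a test run once, then finish."""
    if not any(m["role"] == "tool" for m in messages):
        return {"role": "assistant", "content": None, "tool_calls": [{
            "id": "call_1", "type": "function",
            "function": {"name": "run_tests", "arguments": '{"pattern": "test_parser.py"}'}}]}
    return {"role": "assistant", "content": "Tests ran; ready for the next step."}


history = [{"role": "user", "content": "Fix the failing parser test."}]
print(agent_loop(fake_model, history))  # Tests ran; ready for the next step.
```

Returning tool errors as text, instead of raising, lets the model see what went wrong and try a different action on its next turn.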

How does the 480B parameter architecture work?

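Qwen3 Coder uses a Mixture-of-Experts model with 480B total parameters, of which only 35B are active for any given token. In each MoE layer a router selects a small subset of expert networks per token, so compute per token scales with the active parameters rather than the total, providing large capacity while maintaining efficiency.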
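The gap between total parameters and per-token compute shows up clearly in a toy top-k router. The pure-Python sketch below is a generic Mixture-of-Experts layer with hypothetical sizes (16 experts, 2 active, 8-dimensional vectors) and scalar "experts" in place of feed-forward networks; it is not Qwen3 Coder's actual configuration. The ratio printed at the end uses the page's own figures: 35B of 480B parameters, about 7.3%, are active per token.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """Toy MoE layer: the router scores every expert, but only the top-k run."""

    def __init__(self, dim: int, num_experts: int, top_k: int, seed: int = 0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One router weight vector per expert (a linear gating layer).
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each "expert" is a per-dimension scale, standing in for a feed-forward network.
        self.experts = [[rng.gauss(1, 0.1) for _ in range(dim)] for _ in range(num_experts)]
        self.calls = [0] * num_experts  # how many times each expert actually ran

    def forward(self, x: list[float]) -> list[float]:
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.router]
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in top])  # renormalize over the selected experts
        out = [0.0] * len(x)
        for gate, i in zip(gates, top):
            self.calls[i] += 1  # only selected experts do any work
            out = [o + gate * s * xi for o, s, xi in zip(out, self.experts[i], x)]
        return out


layer = ToyMoELayer(dim=8, num_experts=16, top_k=2)
rng = random.Random(1)
for _ in range(100):
    y = layer.forward([rng.gauss(0, 1) for _ in range(8)])
print(sum(layer.calls))    # 200: 100 tokens x 2 experts, out of 1,600 possible
print(round(35 / 480, 3))  # 0.073, the active share quoted for Qwen3 Coder
```

Renormalizing the gate weights over only the selected experts is one common variant; some MoE designs use the unnormalized top-k softmax probabilities instead.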

What's the context window capability?

Native support for 256K tokens, expandable to 1M tokens with YaRN. This allows analysis of entire repositories and complex, long-form coding tasks.
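YaRN extends a RoPE model's context by rescaling rotary frequencies unevenly: high-frequency dimensions, which complete many rotations within the original window, are left alone; low-frequency ones are interpolated by the scale factor s; and the band in between is blended linearly. It also applies a small attention temperature correction, 0.1·ln(s) + 1. The sketch follows the formulation in the YaRN paper; the head dimension, RoPE base, and the α = 1, β = 32 thresholds are illustrative assumptions, not Qwen3 Coder's published settings. Going from 256K (262,144) to 1M (1,048,576) tokens corresponds to s = 4.

```python
import math


def yarn_frequencies(head_dim: int, base: float, orig_ctx: int, scale: float,
                     alpha: float = 1.0, beta: float = 32.0) -> tuple[list[float], float]:
    """YaRN 'NTK-by-parts' RoPE frequencies plus the attention scaling factor."""
    freqs = []
    for i in range(head_dim // 2):
        theta = base ** (-2 * i / head_dim)  # original RoPE frequency for this pair
        wavelength = 2 * math.pi / theta
        r = orig_ctx / wavelength  # rotations completed in the original window
        if r < alpha:
            gamma = 0.0  # long wavelength: interpolate fully
        elif r > beta:
            gamma = 1.0  # short wavelength: leave unchanged
        else:
            gamma = (r - alpha) / (beta - alpha)  # linear ramp in between
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    mscale = 0.1 * math.log(scale) + 1.0  # attention temperature correction
    return freqs, mscale


scale = 1_048_576 / 262_144  # 1M / 256K = 4.0
freqs, mscale = yarn_frequencies(head_dim=128, base=1_000_000, orig_ctx=262_144, scale=scale)
print(scale, round(mscale, 4))  # 4.0 1.1386
```

In Hugging Face `transformers` configs, YaRN is typically enabled through the `rope_scaling` field with a `"yarn"` type and a `factor`; check the model card for the exact values to use.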

How does Code RL training benefit me?

Because Code RL rewards come from actually executing code against test cases, training on diverse real-world tasks pushes Qwen3 Coder toward code that runs correctly, with higher execution success rates and stronger practical problem-solving.

Can Qwen3 Coder replace Claude Sonnet 4?

Qwen3 Coder performs comparably to Claude Sonnet 4 on Agentic Coding, Browser-Use, and Tool-Use benchmarks, and it is specifically optimized for software engineering tasks.

What tools does Qwen3 Coder integrate with?

Qwen3 Coder works seamlessly with Qwen Code CLI, Claude Code, Cline, and any OpenAI-compatible interface through our API endpoints.

Is Qwen3 Coder suitable for enterprise use?

Yes. Qwen3 Coder runs on scalable Alibaba Cloud infrastructure, offers enterprise-grade API access, and has proven performance on complex software engineering tasks.

How do I get started with Qwen3 Coder?

Install Qwen Code CLI via npm, get API credentials from Alibaba Cloud Model Studio, or integrate directly with your existing development tools.

Ready to Experience Agentic Coding?

Join the revolution in AI-assisted software development